Cloud providers secure the network and infrastructure. Here is a way to protect the software and workloads you run across all your cloud (and hybrid) environments.

Shift Left for Shared Cloud Security

Cloud deployments introduce major new shared security considerations for organizations, and they change some key operational imperatives for development, security, and IT professionals. Commercial cloud providers’ “Infrastructure as Code” delivers multiple layers and types of network and server security out of the box. Paradoxically, that can create a false sense of security around applications, containers, and workloads, all of which come with vulnerabilities that cloud tools can’t catch. Vulnerabilities replicate at light speed in the cloud via “golden images” that can massively expand the exploitable attack surface. Often, a significant amount of time passes before a vulnerability is identified and fixed; in the case of open source, it is often years. And once vulnerable code is deployed, it’s expensive and time-intensive to patch, remediate, quarantine, or roll back. So, while cloud providers take ownership of the Infrastructure as Code elements of the stack, dev and devops teams inside the organization become responsible for a broader application security mandate than in traditional pre-cloud environments.

As the slide below illustrates, when outsourcing core infrastructure to the cloud, application and infrastructure security teams must “shift left” to focus on SDLC and CI/CD pipelines they control, and remove as much vulnerability as possible before deployment. Kudos to Snyk for drilling down to what this really takes in practice!

Slide presented at SNYKCON keynote, 10/18/2020


Scanning, testing, and patch management are essential parts of this discipline, but as a recent RunSafe study shows, scanning tools missed as many as 97.5% of known memory vulnerabilities over the last 10 years, as well as 100% of the “unknown unknown” zero-day vulnerabilities. MITRE also ranked memory CVEs at the top of its most recent list of the most dangerous threats to software.

Cloud Workload Protection

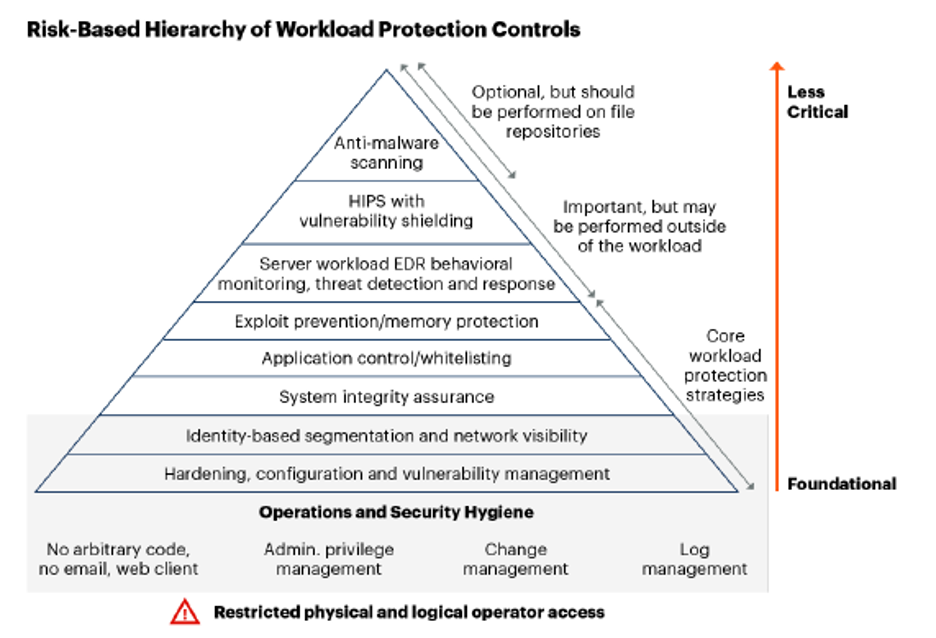

In its recent Cloud Workload Protection Platform (CWPP) research note, Gartner identified memory exploit protection as an essential component of any organization’s CWP strategy (Gartner, Cloud Workload Protection Platforms, 2020):

“Exploit Prevention/Memory Protection–application control solutions are fallible and must be combined with exploit prevention and memory protection capabilities…”—Gartner CWPP 2020

Gartner CWPP, 2020


Per the report, in the Risk-Based Hierarchy of Workload Protection Controls, exploit prevention/memory protection is a core workload protection strategy:

We consider this a mandatory capability to protect from the scenario in which a vulnerability in a whitelisted application is attacked and where the OS is under the control of the enterprise (for serverless, requiring the cloud providers’ underlying OS to be protected). The injected code runs entirely from memory and doesn’t manifest itself as a separately executed and controllable process (referred to as “fileless malware”). In addition, exploit prevention and memory protection solutions can provide broad protection against attacks, without the overhead of traditional, signature-based antivirus solutions. They can also be used as mitigating controls when patches are not available. Another powerful memory protection approach used by some CWPP offerings is referred to as “moving target defense” — randomizing the OS kernel, libraries and applications so that each system differs in its memory layout to prevent memory-based attacks.

Cloud-Native Application Security

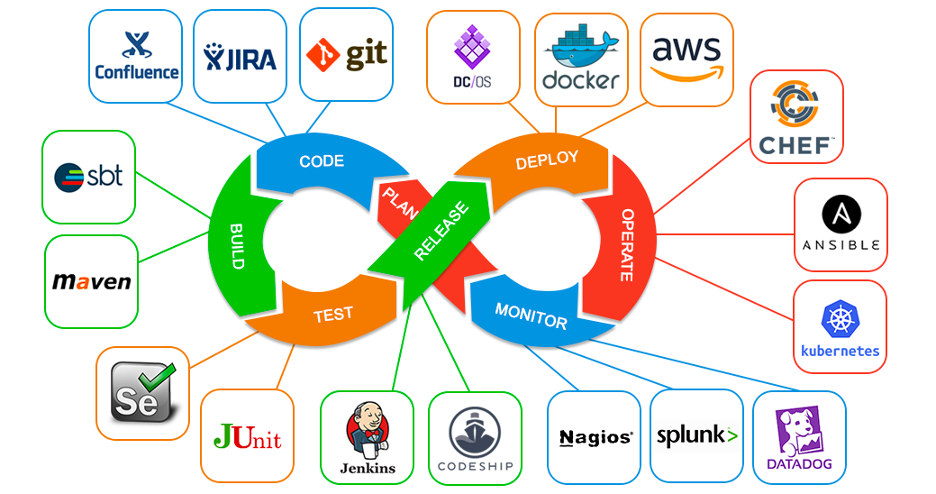

Cloud deployments rely on well-defined devops workflows to create operational efficiency and resilience. Organizations use many different tools and methods to make that happen, but best practices include the components/steps shown below:

https://medium.com/faun/devops-without-devops-tools-3f1deb451b1c


It’s a good assumption that every new code release contains new memory vulnerabilities. This has been borne out over years of experience, with accelerated release cycles and an increased reliance on open-source components increasing the exposure. Beyond best practices in coding and native IDE functionality, however, there is industry consensus that adding more vulnerability prevention steps to sprint cycles is counter-productive. So, in RunSafe’s view, the most effective DevSecOps strategy is to treat every code object as vulnerable.

Immunization from memory vulnerabilities can be inserted anywhere from the Build through Deploy steps. RunSafe’s Alkemist tools integrate with any devops product in this toolchain via simple RESTful API calls, repo gets, secure containers, or CLI.

This can provide comprehensive memory threat protection for all three universal building blocks of cloud computing:

- Code (workloads)

  - Proprietary/in-house

  - Third-party commercial

  - Open Source

- Container (images)

- Cloud: Infrastructure as Code (IaC)

How Can RunSafe Help with Your Cloud Deployment?

RunSafe provides memory protection at the code, container, or workload level for compiled software and firmware. With this protection in place, downstream consumers in the devops workflow can treat protected workloads as “memory safe” relative to unprotected ones. This enables several DevSecOps advantages:

- The binary images in a repo (from Alkemist:Repo or Alkemist:Binary) make workloads “memory safe.”

- If any cloud workload is not protected, the devops workflow can then add policy actions, depending on its capabilities, to send an alert, increase a risk score or monitoring frequency, isolate or reduce access for the workload, declare the workload untrusted, deny deployment, or initiate a replacement process to get a protected image.

- The workflow logic could go as far as pulling a new build through Alkemist:Source, or pulling an existing binary through Alkemist:Binary. This would most likely involve policy control via GitLab, Jenkins, or another CI/CD platform.
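The policy actions described in the list above can be sketched as a small decision function that a CI/CD job might call before deployment. This is only an illustrative sketch, not RunSafe's or any CI/CD platform's actual API: the `Workload` fields, the `Action` names, and the `decide` function are all hypothetical, and the `memory_protected` flag is assumed to be set by some earlier pipeline step.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """Hypothetical policy outcomes, loosely mirroring the actions listed above."""
    ALLOW = "allow"
    ALERT = "alert"
    REBUILD_PROTECTED = "rebuild_protected"
    DENY_DEPLOYMENT = "deny_deployment"


@dataclass(frozen=True)
class Workload:
    name: str
    memory_protected: bool  # assumed to be recorded by an earlier pipeline step
    environment: str        # e.g. "dev", "staging", "prod"
    source_available: bool  # whether the pipeline can rebuild from source


def decide(workload: Workload) -> Action:
    """Pick a policy action for a workload about to be deployed."""
    if workload.memory_protected:
        return Action.ALLOW
    # Unprotected workloads outside production only raise an alert.
    if workload.environment != "prod":
        return Action.ALERT
    # In production, prefer replacing the image with a protected build.
    if workload.source_available:
        return Action.REBUILD_PROTECTED
    # Otherwise block the deployment.
    return Action.DENY_DEPLOYMENT


if __name__ == "__main__":
    candidate = Workload("billing-api", memory_protected=False,
                         environment="prod", source_available=True)
    print(decide(candidate).value)
```

In a real pipeline, the returned action would map to whatever the platform supports, such as failing a job, opening a ticket, or triggering a rebuild. The thresholds here (alert outside production, rebuild or deny in production) are one possible policy, chosen only for illustration.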

Adding code immunization into cloud DevSecOps workflows can completely neutralize memory vulnerabilities in any compiled code. This produces significant ROI, since 40-70% of identified vulnerabilities are memory-based, depending on the code stack. The value and importance of this step are even greater today given Synopsys’ most recent Black Duck Open Source Security finding that 95%+ of new enterprise applications include open source components, which by their nature increase the incidence and persistence of unknown zero-day vulnerabilities.