AI is now woven into the everyday workflows of embedded engineers. It writes code, generates tests, reviews logs, and scans for vulnerabilities. But the same tools that speed up development are introducing new risks—many of which can compromise the stability of critical systems.

RunSafe Security’s 2025 AI in Embedded Systems Report captures the scale of this shift. Based on a survey of more than 200 professionals across the US, UK, and Germany who work on embedded systems in critical infrastructure, it found that 80.5% use AI in development and 83.5% already have AI-generated code running in production.

As AI-generated code becomes a permanent part of embedded software pipelines, embedded systems teams need a clear view of where vulnerabilities are most likely to emerge.

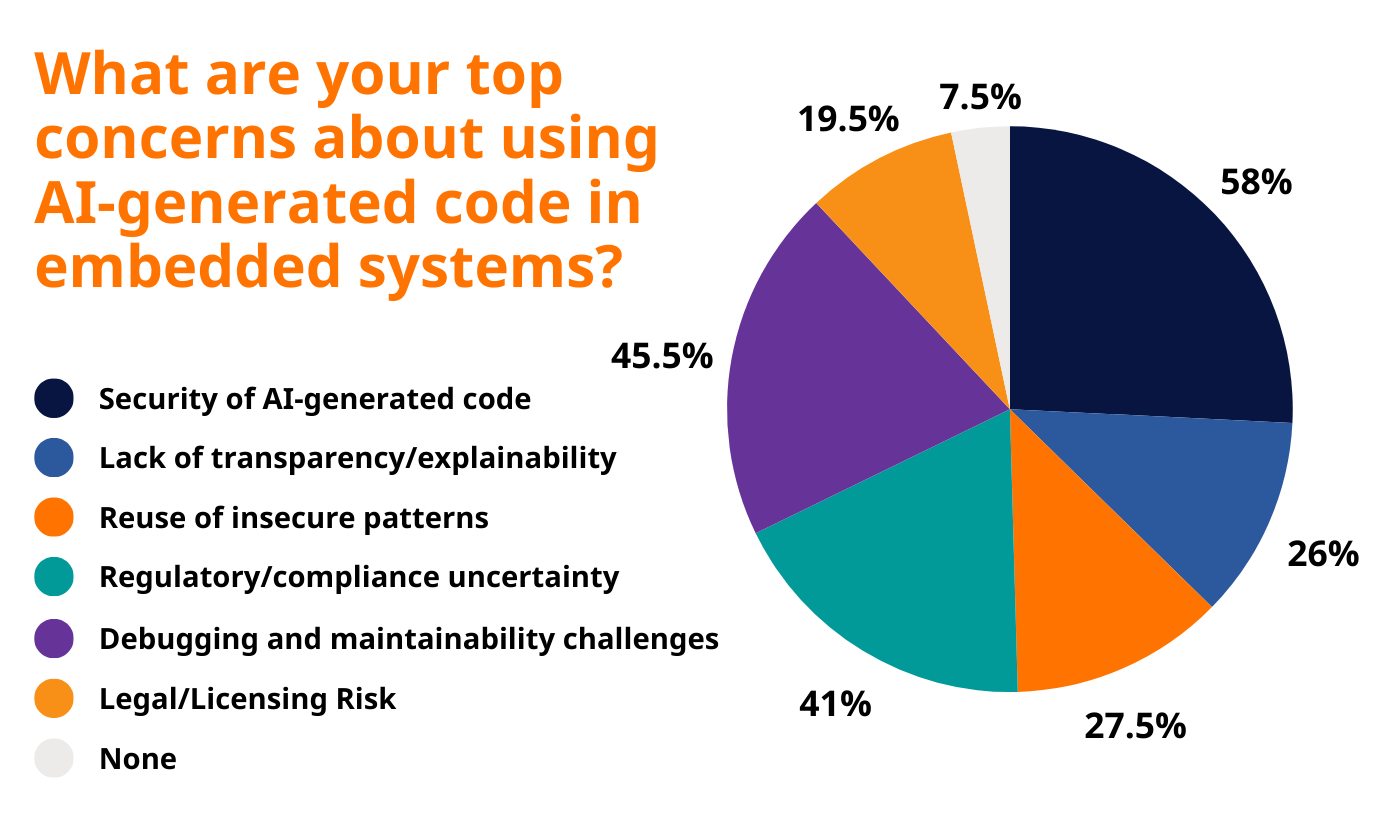

According to respondents, six risks stand out as the most urgent for embedded security in 2025.

The 6 Most Critical Risks of AI-Generated Code

1. Security of AI-Generated Code (53% of respondents)

Security vulnerabilities in AI-generated code remain the single most significant concern among embedded systems professionals. More than half of survey respondents identified this as their primary worry.

AI models are trained on vast repositories of existing code, much of which contains security flaws. When AI generates code, it can replicate and scale these vulnerabilities across multiple systems simultaneously. Unlike human developers, who might introduce a single bug in a single module, AI can reproduce the same flawed pattern across entire codebases.

The challenge is particularly acute in embedded systems, where code often runs on safety-critical devices with limited ability to patch after deployment. A vulnerability in an industrial control system or medical device can persist for years, creating long-term exposure. Traditional code review processes, designed to catch human errors at human speed, struggle to keep pace with the volume and velocity of AI-generated code.

2. Debugging and Maintainability Challenges (45.5% of respondents)

Nearly half of embedded systems professionals worry about the difficulty of debugging and maintaining AI-generated code. When a human engineer writes code, they understand the logic and intent behind every decision. When AI generates code, that understanding often doesn’t transfer.

When bugs appear, tracing their root cause becomes significantly harder. Engineers must reverse-engineer the AI’s logic rather than review their own design decisions. When modifications are needed, whether for new features or security patches, developers must work with code they didn’t write, and they may struggle to change it without introducing new issues.

In embedded systems, where code often has a lifespan measured in decades, maintainability is critical. If the original code is AI-generated and poorly documented, each maintenance cycle becomes progressively more difficult and error-prone.

3. Regulatory and Compliance Uncertainty (41% of respondents)

Regulatory and compliance uncertainty was flagged as a major concern by 41% of survey respondents. The certification processes organizations rely on today weren’t designed to account for AI-generated code. In regulated industries such as medical devices or aerospace, obtaining code certification requires extensive documentation of design decisions and validation procedures. When AI generates the code, much of that documentation doesn’t exist in traditional forms.

This creates several challenges. Organizations must make their own determinations about what constitutes adequate validation for AI-generated code, with limited regulatory guidance. When incidents occur, the question of liability becomes murky: Who is responsible when AI-generated code fails?

4. Reuse of Insecure Patterns (27.5% of respondents)

AI models learn from existing code, and that code is often flawed. More than a quarter of embedded systems professionals worry that AI tools will perpetuate and scale insecure coding patterns across their systems.

This risk is particularly concerning because it’s systemic rather than isolated. C and C++ have historically dominated embedded systems, and code in those languages commonly contains memory safety issues. An AI model trained on that code will likely generate code with similar vulnerabilities.

This risk isn’t just theoretical. Memory safety vulnerabilities such as buffer overflows and use-after-free errors account for roughly 60-70% of all security vulnerabilities in embedded software. If AI tools perpetuate these patterns at scale, the industry could see a multiplication of one of its most persistent and exploitable vulnerability classes.
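To make the buffer overflow pattern concrete, here is a minimal sketch in C. It is an illustration only and does not come from the report: the names `set_name_unsafe`, `set_name_checked`, and `DEVICE_NAME_MAX` are hypothetical. The first function shows the kind of unchecked copy that is common in older C code, and the second shows one possible bounded alternative that rejects oversized input.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical fixed-size field, chosen only for illustration. */
#define DEVICE_NAME_MAX 16

/* Insecure pattern: strcpy() performs no bounds check, so it writes
 * past the end of dst whenever strlen(src) >= DEVICE_NAME_MAX. */
void set_name_unsafe(char dst[DEVICE_NAME_MAX], const char *src)
{
    strcpy(dst, src);
}

/* Bounded alternative: copies src only if it fits, including the
 * terminating '\0'. Returns 0 on success and -1 on rejection.
 * On rejection, dst is left as an empty string when dst is usable. */
int set_name_checked(char *dst, size_t dst_size, const char *src)
{
    if (dst == NULL || dst_size == 0) {
        return -1;
    }
    dst[0] = '\0';
    if (src == NULL) {
        return -1;
    }

    /* Measure src, but never look further than dst_size characters. */
    size_t len = 0;
    while (len < dst_size && src[len] != '\0') {
        len++;
    }
    if (len == dst_size) {
        return -1; /* no room for the string plus its terminator */
    }

    assert(len < dst_size); /* invariant: len + 1 bytes fit in dst */
    memcpy(dst, src, len + 1);
    return 0;
}
```

Rejecting oversized input instead of silently truncating it is a design choice made for this sketch. Some systems may prefer truncation with an explicit status code. Either way, the destination size travels with the pointer, which is the property the unchecked version lacks.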

5. Lack of Transparency and Explainability (26% of respondents)

AI-generated code often functions as a black box. The code works, but understanding why it works or why it fails can be extraordinarily difficult. Some 26% of embedded systems professionals cite this lack of transparency as a significant concern.

In embedded systems, where reliability and safety are paramount, this opacity creates serious problems. Engineers need to understand not just what the code does, but how it handles edge cases, error conditions, and unexpected inputs. With AI-generated code, that understanding is often incomplete or absent.

The survey reveals additional concern about what happens when AI-generated code becomes increasingly bespoke. Historically, when developers used shared libraries, a vulnerability discovered in one place could be patched across an entire ecosystem. If AI generates unique implementations for each deployment, this shared vulnerability intelligence fragments, making collective defense more difficult.

6. Legal and Licensing Risks (19.5% of respondents)

Nearly one in five embedded systems professionals identifies legal and licensing risks as a concern with AI-generated code. AI models are trained on vast amounts of code, much of it open source with specific licensing requirements. When AI generates code, questions arise: Does the output constitute a derivative work? Who owns the copyright to AI-generated code?

These questions remain largely unresolved, and different jurisdictions may answer them differently. For embedded systems manufacturers, this creates software supply chain risk. If AI-generated code inadvertently reproduces proprietary algorithms or patented methods from its training data, manufacturers could face infringement claims.

For organizations in regulated industries or those serving government customers, these legal uncertainties can be deal-breakers. Defense contractors, for example, must provide clear provenance and licensing information for all software components.

Why These Risks Matter for Embedded Systems Teams

The 2025 landscape reveals an industry at a critical juncture. AI has fundamentally changed how embedded software is developed, and that transformation is accelerating: 93.5% of survey respondents expect their use of AI-generated code to increase over the next two years.

But this acceleration is happening faster than security practices have evolved. The tools and processes that worked for human-written code at human speed aren’t designed for AI-generated patterns at machine velocity.

The good news is that awareness is high and investment is following: 91% of organizations plan to increase their embedded software security spend over the next two years.

Understanding these six critical risks provides a roadmap for where design decisions, security investments, and process changes will have the most significant impact. Organizations that address these risks proactively—through better tooling, enhanced testing, runtime protections, and clearer governance—will not only strengthen their systems but also position themselves as industry leaders.

The insights in this post are based on RunSafe Security’s 2025 AI in Embedded Systems Report, a survey of embedded systems professionals across critical infrastructure sectors.

Explore the full report to see the data, trends, and strategic guidance shaping the future of secure embedded systems development.