The EU Cyber Resilience Act (CRA) may not be fully enforced until December 2027, with reporting obligations starting in September 2026, but the time to act is now. In this essential episode of our podcast, we dive into the details of the CRA with cybersecurity expert Joseph M. Saunders, Founder and CEO of RunSafe Security.

As software becomes a critical part of nearly every product and service, the CRA places new, sweeping demands on manufacturers, developers, and supply chain stakeholders—introducing real consequences for security failures.

Joe unpacks the origins and intent of the CRA, details the pivotal role of Software Bill of Materials (SBOMs) in compliance and security, and explores how liability is shifting under this regulation. He also offers practical insight into how forward-thinking companies are already preparing for the law’s impact.

We also tackle a critical post-compliance question: If bugs are inevitable, what role will cyber insurance play when vulnerabilities are exploited despite best efforts?

Whether you’re in security, product development, compliance, or leadership, this episode will give you knowledge to stay ahead of the curve.

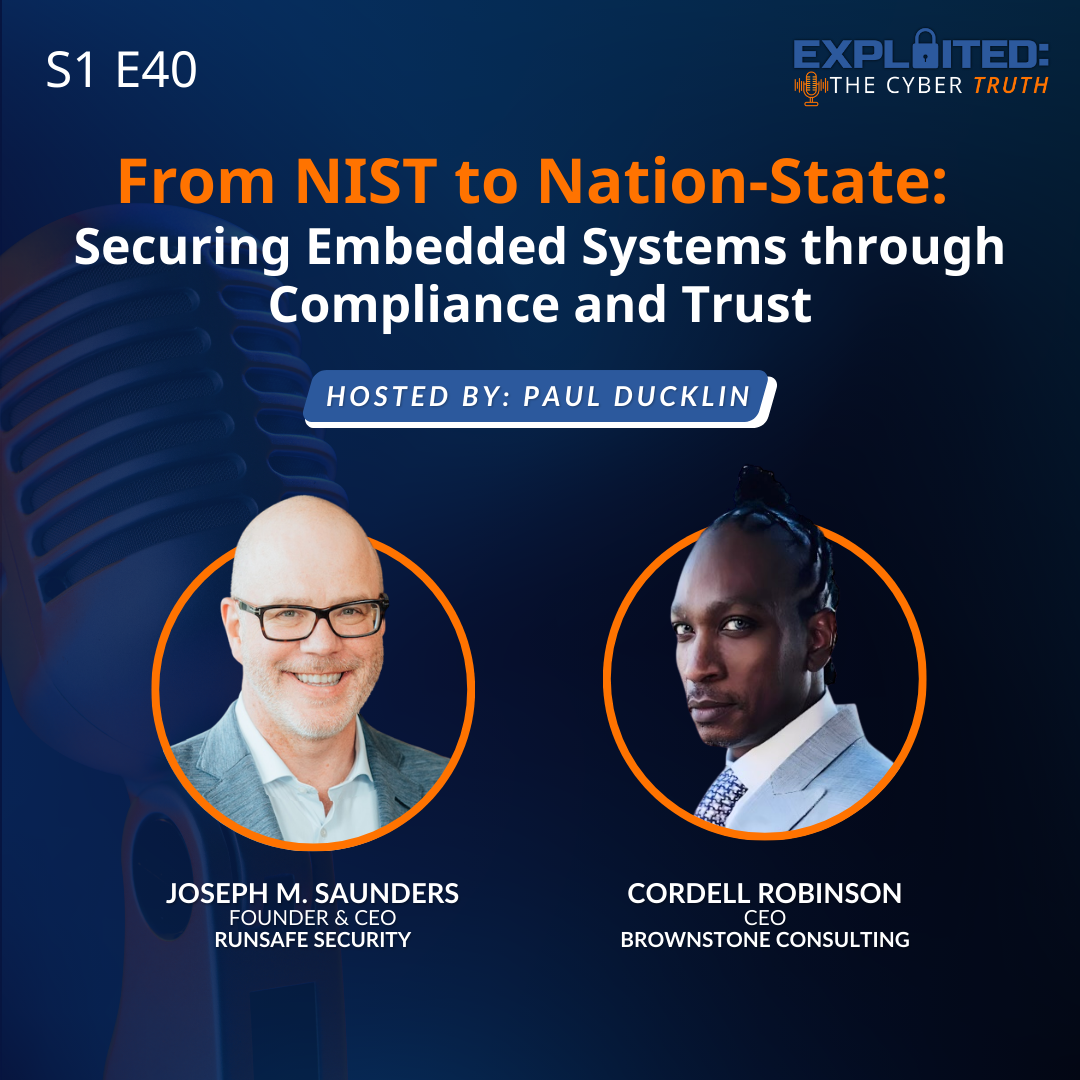

Speakers:

Paul Ducklin: Paul Ducklin is a computer scientist who has been in cybersecurity since the early days of computer viruses, always at the pointy end, variously working as a specialist programmer, malware reverse-engineer, threat researcher, public speaker, and community educator.

His special skill is explaining even the most complex technical matters in plain English, blasting through the smoke-and-mirror hype that often surrounds cybersecurity topics, and helping all of us to raise the bar collectively against cyberattackers.

Joe Saunders: Joe Saunders is the founder and CEO of RunSafe Security, a pioneer in cyberhardening technology for embedded systems and industrial control systems, currently leading a team of former U.S. government cybersecurity specialists with deep knowledge of how attackers operate. With 25 years of experience in national security and cybersecurity, Joe aims to transform the field by challenging outdated assumptions and disrupting hacker economics. He has built and scaled technology for both private and public sector security needs. Joe has advised and supported multiple security companies, including Kaprica Security, Sovereign Intelligence, Distil Networks, and Analyze Corp. He founded Children’s Voice International, a non-profit aiding displaced, abandoned, and trafficked children.

Key topics discussed:

- What the CRA covers and who it affects

- Why SBOMs are central to compliance and trust

- How the law shifts liability for software flaws

- Real-world prep strategies from industry leaders

- The role of cyber insurance in a post-CRA world

Episode Transcript

Exploited: The Cyber Truth, a podcast by RunSafe Security.

[Paul] Welcome back everybody to Exploited: The Cyber Truth. I am Paul Ducklin, and today, I am joined by Joe Saunders, CEO and founder of RunSafe Security. Welcome back, Joe.

[Joe] Hey, Paul. It’s great to be here and look forward to the discussion on this very, very important topic.

[Paul] Yes. So let me tell our listeners what we’re talking about this week, the EU Cyber Resilience Act Exposed. And now by exposed, I don’t mean that in a pejorative sense, like you might expose true crime. I mean digging into the details because there’s an awful lot in it and an awful lot that’s important.

[Paul] But just before I get going, Joe, I’ll just mention that the standard abbreviation for the Cyber Resilience Act is CRA, Charlie Romeo Alpha. Expect to hear that initialism a lot over the next few years. So, Joe, maybe the best place to start is, where did this come from? When does it kick in? And most importantly, to whom does it apply?

[Joe] There’s obviously some history here because you don’t just come up with the Cyber Resilience Act overnight.

[Paul] No.

[Joe] Organizations need a chance to prepare, and people need to be thoughtful about what goes into such a comprehensive act. And so it originated from the European Commission. If you go back to 2022, they proposed the Cyber Resilience Act, and it went through some iterations, some review, and some discussions with different stakeholders along the way.

[Joe] And, ultimately, it was approved in March of 2024. So last year, about twelve months, thirteen months ago, we’ve been hearing more about it once it got approved with the idea that, ultimately, it goes into effect in 2026, and there’s a couple levels of going into effect. So it was published in November of 2024, so we started to see more details just a few months ago. It’ll come into a new phase where you have to start reporting cyber incidents and vulnerabilities in September of 2026. And, really, by December of 2027, you need to have all the requirements implemented, including generating a Software Bill of Materials and the like.

[Joe] Who does it affect and who needs to do it? Well, if you are selling goods in the EU and manufacturing goods in the EU and you produce devices of one sort or another that are connected to the Internet, that are cyber enabled, if you will, then you’re likely subject to it. There are maybe a couple exceptions in there where there’s overlap with other regulations in certain industries.

[Paul] That’s right. If you make cars or airplanes or things that are already strictly regulated, then this doesn’t apply as well, presumably because you’re already subject to all these provisions and more.

[Joe] Exactly right. And it makes sense that aviation and automotive would have safety standards to adhere to. We’re all accustomed to that. And lo and behold, here we are with cyber enabled devices that do pose a risk to consumers if things don’t operate, if there’s bugs in them, or if they get compromised. And so it makes sense that a cyber resilience act for everybody else is here right before us for the EU.

[Paul] Yes. So it’s interesting that on the public facing EU page, europa.eu, they’ve chosen to emphasize the fact that this is really about everybody who makes software and hardware, potentially, and that it is actually of value even to people who think, hey, state sponsored attackers or cyber crooks would never be interested in little old me. It says, from baby monitors to smartwatches, products and software that contain a digital component are omnipresent in our daily lives. Now I think we all know that. Here’s the kicker.

[Paul] Less apparent to many users is the security risk that such products and software may present. So it isn’t just about protecting electricity substations, which is clearly important, or water flow weirs, or sewage systems. There is a sense that the devil is in the details, because as we mentioned in the last couple of podcasts, when you look at Volt Typhoon and Salt Typhoon and attackers like that, consumer devices are part of their toolkit. Because if you can’t get in through the big expensive firewall, why not get in through the cheap and cheerful one? The cheap and cheerful one is the nation state’s friend, is what you’re saying.

[Joe] So clearly, all these devices, there are security risks, there’s privacy risks, there’s data rights, there’s all sorts of considerations. But as you imply, there are devices that are connected to the rest of the network where there are still yet other devices. There are a lot of implications to cyberattacks, and I do think part of it for those consumer devices is the safety of consumers and the privacy and the data rights of consumers as well.

[Paul] Absolutely. I think when people think about safety in the context of cybersecurity, they think about important things like, could it set on fire while I’m away?

[Paul] Could I get an electric shock? Could it blow my hand off? But actually, as you say, there’s almost as much at stake, albeit not physical, if your privacy, your identity, even your civil rights, get infringed by somebody with your worst interests at heart. Joe, an intriguing aspect of the CRA is that it does try to be a software and hardware lifetime act, and it explicitly states again on their portal page that the CRA specifies requirements that govern the planning, the design, the development, and the maintenance of products. What in your opinion is both the most notable and the most important?

[Joe] Well, I think the most notable is you do need to commit from start to finish to that whole life cycle. And what we’ve seen in the software development process is it is important to offer updates. We also see that in specific industries like aviation that we mentioned earlier, where there’s this notion of airworthiness. And in order to maintain airworthiness, you need to maintain systems. And the same is true for the Cyber Resilience Act.

[Joe] In order to maintain security, you need to maintain software. So I think that full commitment to the full life cycle is a very important aspect. I also think that part of this is that you need to work in a cross functional way. This isn’t simply a development team saying, hey, you know, we’ve got this security plan in place. We’re all good.

[Joe] Everyone is good. There’s legal aspects. Are you getting software from suppliers? What language do I put on my documentation when I ship my software? To marketing angles, making sure you’re in compliance with how you display if you’re adopting these standards, to different product features and the like.

[Joe] And so you do need to do testing. You do need to evaluate the components that you have, and you need to do it throughout the full life cycle, as you say, from planning all the way to support and probably starting sooner than people think so that you are well ahead of, you know, the requirements that are here. So I think that full commitment is one angle, having a plan, starting on time. Those are all basic processes. But you can imagine a security regulation will touch all aspects of a product’s life cycle.

[Joe] And in fact, people may ask the question from time to time, how will the CRA affect this component that we wanna deliver in our product?

[Paul] Joe, I know one of the things that’s near and dear to your heart, and it is something that at least some software vendors and retailers have been notoriously bad at in the past, is the thing we jokingly referred to in the last podcast as software bomb. But it’s not a bomb with a b at the end. It doesn’t mean it’s going to break. It means bill of materials, listed ingredients.

[Paul] It means that like today’s food retailers, you have to know what’s in your products, and you have to state what’s in your products so that it’s absolutely clear to anybody who needs to fix them or manage them or update them or assess their risk in the future.

[Paul] How much effort do you think that will be for vendors and retailers who have just ignored that problem so far?

[Joe] Well, the good news is there are a lot of standard practices today to generate a Software Bill of Materials, but you can see why it’s so important. And I think the point there is part of knowing if you’re in compliance or knowing where the vulnerabilities are, you need to know the exact components that are in that software or that software hardware delivery to know if those things are protected or not. So Software Bill of Materials is actually an essential point to help share across the ecosystem, across the value chain.

[Joe] From, you know, open source software that you’re bringing in, you should know every single component that’s in there. If you outsource development of a component of a product, you want to know every single component that goes into that aspect of the product, and your customers do. So if you prepare that product and then ship it for final assembly, then they need to consider it. And it reminds me of this wonderful story of a manufacturing plant in Detroit, Michigan called the Rouge plant. And I don’t know if you’ve ever heard of this, but in the 1930s, the Rouge plant made every single component from raw materials all the way to a finished good product in the same manufacturing plant.

[Joe] And today, manufacturing is much different. It’s an assembly plant, and you get parts and components from everywhere else. The same is true in the software development industry. It is a complex ecosystem, a complex supply chain, so the Software Bill of Materials is essential at every step of the way to know what’s in the final product when it gets shipped because you need to know at every stage. I think all those distributed software supply chain participants and stakeholders should be adopting Software Bill of Materials today, and there’s great standards around it, but it’s essential that they do it by the time the Cyber Resilience Act takes hold.

[Paul] Yes. Because I imagine if you can’t say what’s in your product, then it would be very difficult with a clear conscience and with legal righteousness to put the CE mark on your product and say, oh, yes. I comply with cyber resilience. Because as you mentioned in the last podcast, remember Log4j? The bug in a Java logging library that could affect you even if you didn’t write your software in Java, but you had someone else that you got a module from that you handed data to, and they happened to write it in Java, and they happened to use Log4j.

[Paul] I think the way you described it last time is when that news hit was just before Christmas, as always. You spoke about gazillions of phone calls of people who ought to have had that information at their fingertips, suddenly having to find out from up their supply chain, down their supply chain. They can’t go to Terry in the polishing department in that corner of the Rouge plant because this thing could have come from anywhere.
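Editor’s note: the Log4j scenario Paul describes, where a library reaches you through someone else’s module, amounts to a search over a dependency graph. The following is a minimal sketch in Python, assuming a hand-written dependency map in place of a real SBOM. Every component name, the `DEPENDS_ON` table, and the `find_paths_to` helper are hypothetical and chosen only for illustration.

```python
from collections import deque

# Hypothetical dependency data standing in for a real SBOM.
# Keys are components; values are the components they depend on directly.
DEPENDS_ON = {
    "billing-app": ["report-module", "http-client"],
    "report-module": ["pdf-renderer", "log4j-core"],
    "http-client": [],
    "pdf-renderer": [],
    "log4j-core": [],
    "doorbell-firmware": ["http-client"],
}


def find_paths_to(component, roots, depends_on):
    """For each root product, return one dependency path that reaches `component`.

    Products that never reach the component are left out of the result.
    """
    paths = {}
    for root in roots:
        queue = deque([[root]])  # breadth-first search over paths
        seen = {root}
        while queue:
            path = queue.popleft()
            current = path[-1]
            if current == component:
                paths[root] = path
                break
            for dep in depends_on.get(current, []):
                if dep not in seen:
                    seen.add(dep)
                    queue.append(path + [dep])
    return paths


affected = find_paths_to(
    "log4j-core", ["billing-app", "doorbell-firmware"], DEPENDS_ON
)
for path in affected.values():
    print(" -> ".join(path))
```

In this made-up data, only the billing product reaches the logging library, and it does so through a module written by someone else. With a machine-readable SBOM on hand, that kind of lookup replaces the phone calls up and down the supply chain.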

[Joe] And I do wanna put a plug in for the Rouge plant so anybody who wants to check it out. It’s a magnificent assembly plant today.

[Joe] With that said, you’re exactly right on the Software Bill of Materials, and I would venture to say if people have adopted a Software Bill of Materials, but they’re only doing it to say they’ve done it, they’re missing part of the point because exactly what you said, that information that is demonstrated from a Software Bill of Materials is actually a foundational element to help you think about your security strategies. So why not start there? Find out everything that’s in your software and then find out what you need to fix. Are there bugs? Are there vulnerabilities?

[Joe] And use that as a guidepost. Software Bill of Materials could be used as, oh, it’s a compliance thing. I like to try to encourage people to think about it as, hey. It’s a new journey to elevate your security posture even higher by really knowing exactly what’s in all those components in your final product.

[Paul] So, Joe, one of the other things that will change possibly significantly, particularly for software vendors who haven’t really had to face this directly before, is the change in regulations relating to liability.

[Joe] Yeah. I mean, the liability is actually, of course, the stick in this case. Sometimes we like to see carrots. Sometimes sticks are necessary. This stick in particular, if you have to pay a fine for not really following through on a cyber requirement that would protect your devices, then you’re gonna be even more cautious about making sure you have the processes in place to demonstrate that you are doing the right things.

[Joe] Having a risk of a penalty up to, say, €10,000,000, that’s a pretty significant fine for product companies to face. And that can be compounding if you’re found with other vulnerabilities and liabilities that result from you not enacting or adopting the right principles to ensure that you’re in compliance with the Cyber Resilience Act. The liability portion is, in fact, unfortunately, a necessary thing to really get widespread adoption. I’m sure there’s people who don’t like it, but imagine the business case of saying, I’m gonna risk never having a software bug, never having a cyberattack, and I’ll just take the fine somewhere down the road. That doesn’t make sense.

[Joe] So that’s why the fine is so steep. And instead, if you can put positive resource into your cyber program, actually, what might happen is you may have better code quality as a result, not just fewer vulnerabilities, but higher code quality, better practices, more mature software development practices, then you’re gonna get a lot of gain out of that in terms of improving your product features down the road, improving your ability to respond, improving your ability to fix things, improving your ability to do updates. If people look at it as a negative and a stick as I joke about, that’s almost unfortunate. Look at it as an opportunity to improve your processes, and if you do so, you’ll be better off and perhaps even more competitive going forward because you have better processes in place.

[Paul] Yes. I can see why the naysayers would go, wow, you’re going to fine me up to two and a half percent of my global turnover just for a glitch in my software. That sounds extreme. I sort of get that point. Although, my understanding is that you don’t just get a 2.5% of turnover fine for every single infringement; there are scales. But I guess the flip side of that is to say, do you mean that you’re not prepared to put your money where your mouth is and sell me a product that you expect me to rely upon, say, an Internet doorbell or a webcam that could be used to spy on my family or my business?

[Paul] So I can see both sides of that coin. But it doesn’t really matter what you think, that’s embedded in the law, isn’t it?

[Joe] Yeah. 100%. And, you know, I think these are the definitions of public goods where having security, having confidence in a product that your privacy won’t be compromised or it won’t malfunction, having standards, having expectations.

[Joe] Without that, in the digital age, with all the complexity of software and all the risk that you might have, it’d be very hard to differentiate claims from one brand to another, and so this creates a level playing field in that regard. And I know it could be perceived as too onerous, but there’s a lot at stake from a cyber perspective that could change what we have become accustomed to in a well functioning society. And I think having solid security standards does, in fact, help maintain a well functioning society.

[Paul] I agree because I think we’ve got to the state, at least with some suppliers of software and hardware, that everyone’s ready to say what they mean. And what the CRA says is, you have to mean what you say too.

[Paul] You can’t just put out the marketing guff. You have to actually back that up. Now, Joe, we talked about how the costs could be seen as onerous for some people, particularly for small businesses. And nobody felt this more keenly in the early days when this act was still a bill and still being discussed than the open source community. And they were quite alarmed at first because they figured, we don’t get paid for this.

[Paul] We even give you the source code. And if you choose to accept it, then why should we carry the liability? And you didn’t consult with us even though our code goes into 70% of the products sold in Europe. You only spoke to the commercial players. My understanding is that the EU regulators actually came to the party and reached very much what can be described as peace with honor with the open source community.

[Joe] Yeah. And the open source community does a tremendous service. If you think about if every product needed to have I’ll just make something up, like a calculator built in. It doesn’t make sense that every product manufacturer should build from the ground up calculator functionality for their product. And I just use that as an example, not that calculators are the most common open source components.

[Joe] And so the open source community provides tremendous benefit. And because they get distribution of their software, there’s also a lot of feedback on it, and it’s very secure because you get all this feedback through all this use. It doesn’t mean that they should also carry the liability. Great practices have been developed for testing software you receive from third parties, including open source. And so I think the product manufacturers or those who are assembling the final products do have a duty to test the software they get from other sources, and it does make sense that if you choose to accept open source software, that you’re also accepting that it too may have flaws in it.

[Joe] Who’s responsible ultimately for the security of that final product? I think it’s the final product manufacturer who sells it out to the public.

[Paul] When the CRA kicks in, you will be obliged to state clearly in advance how long you plan to support it for. If you plan to leave your customers hanging in just a few short months, you have to put that in writing upfront, basically.

[Joe] Yeah.

[Joe] And it’s a good thing. I mean, we want mature products that are maintained. I go back to car dealerships, which exist to repair things in automobiles after they drive off the assembly line. Nobody would buy a car, a brand new car, if there was no place to have it serviced or maintained going forward. The reality is that the same is true in software.

[Joe] You can’t just produce one version of software and expect that it’s gonna last forever without bugs being found. Even the best software development organizations, I like to point out Google and Microsoft and Lockheed Martin. I would even throw in folks like Netflix and Facebook and these other organizations. They find software bugs, and they’re really good at software development. We do need to have a commitment to fixing problems.

[Joe] In the Cyber Resilience Act with liability, there’s a serious commitment. If you wanna produce a product, you’ve gotta maintain it, and I think it’s a great thing.

[Paul] So, Joe, what do you think are the hardest things that a software vendor who hasn’t confronted any of this before will need to do? What’s the best place to start in your opinion?

[Joe] Yeah.

[Joe] I do think the commitment across the full life cycle is key. I do think the cross functional commitment, as I said earlier, is also key. I do think having a mature software development life cycle process is probably the most important thing. And if you haven’t invested in automated tools, automated build tools, automated deployment tools, if you haven’t gotten used to doing updates on a consistent basis, you need to get that stuff in order. So I would make sure you’ve got a robust software development life cycle consistent with things like we talked about last time, Secure by Design.

[Joe] But also, I think a key aspect of that is to start with the Software Bill of Materials and find out everything along the value chain that you’re including in your software and understanding the risk there. And that’d be a very, very simple place to start. How much exposure do I have today? And what is that gap? How long does it take me normally to fix those bugs, those vulnerabilities, and start to plan accordingly.

[Joe] If you’re really good at it and you can fix all that stuff in a month’s time, well, maybe you can wait until a month before the program’s required. But most organizations cannot do that. So I would get started early. I would generate a Software Bill of Materials. I would look at all the vulnerabilities across my entire ecosystem and I would start to prioritize from there how to improve my software development process, how to do updates, how to fix bugs, and look where are the weak links in my processes so that when you get to the end state, you have done an exhaustive review of the areas that need the most improvement.

[Joe] So it’s probably not the same for every organization, but the starting point of looking at vulnerabilities and where they’re introduced in your software supply chain or in your supply chain in general is probably one of the most important things you can find out at the start to help you prioritize where to put your effort in so you’re not caught off guard when the CRA goes into full effect.
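Editor’s note: the starting point Joe outlines (generate an SBOM, look up the known vulnerabilities for each component, then prioritize) can be sketched roughly as follows in Python. This is an illustration under stated assumptions, not a real scanner. The `Component` and `Advisory` types, the `triage` function, the component names, the advisory identifiers, and the severity scores are all hypothetical; a real workflow would read a standard SBOM file and query an actual vulnerability database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    version: str


@dataclass(frozen=True)
class Advisory:
    identifier: str          # made-up ID, not a real CVE
    component: str
    affected_versions: frozenset
    severity: float          # assumed 0-10 scale, higher is worse


def triage(sbom, advisories):
    """Match SBOM components to advisories; return findings, worst first."""
    findings = []
    for component in sbom:
        for advisory in advisories:
            if (advisory.component == component.name
                    and component.version in advisory.affected_versions):
                findings.append((advisory.severity, advisory.identifier, component))
    findings.sort(key=lambda finding: finding[0], reverse=True)
    return findings


# Hypothetical SBOM and advisory feed, for illustration only.
sbom = [
    Component("tls-lib", "1.0.2"),
    Component("image-codec", "4.1"),
    Component("json-parser", "2.3"),
]
advisories = [
    Advisory("EXAMPLE-0001", "image-codec", frozenset({"4.0", "4.1"}), 5.4),
    Advisory("EXAMPLE-0002", "tls-lib", frozenset({"1.0.2"}), 9.1),
    Advisory("EXAMPLE-0003", "json-parser", frozenset({"1.9"}), 7.0),
]

findings = triage(sbom, advisories)
for severity, identifier, component in findings:
    print(f"{severity:>4}  {identifier}  {component.name} {component.version}")
```

The sorted list gives a rough answer to Joe’s questions, "How much exposure do I have today?" and "What is that gap?", and it is the kind of output a team could track over time to see how long fixes normally take.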

[Paul] And it’s not just that you need a bill of materials for the components that get built into your software, although obviously, you absolutely need to know that. You also need to understand the bill of materials for the process that builds your software. Because, as we’ve seen many times in the past, when state sponsored actors particularly want to poison a project so that it will bleed into lots of other projects downstream, typically, they don’t try to change the source code of the product itself because everyone’s watching there. They try and introduce something malevolent into the process of building the software in the first place, so the software can come out fine at the end.

[Paul] And everyone goes, oh, look, it’s perfect. But along the way, you’ve actually tainted your build environment, or you’ve opened up a backdoor that means that somebody else can come in and wander around and plunder all your stuff in the future.

[Joe] And I don’t know if you know this or not, but I’ll say it if you don’t. If you go back a couple years, GCHQ found in Huawei routers that exact problem. It was the same exact source code, but lo and behold, depending on the build system you used, there was a backdoor or there wasn’t a backdoor, and it was the exact same source code going in.

[Joe] And that was precisely what GCHQ found in Huawei routers that were exposing infrastructure a few years back. With that said, you’re exactly right. You know, when you think about a Software Bill of Materials, if you just built it from the source and said, here’s my source code, I’ll tell you everything that’s going to go into the binary after I compile it, then you’re not going to be right. The source code isn’t the only contributor to the end state components. If you just look at the binary and you want to derive what’s in it, you’re going to miss stuff because you rely on heuristics.

[Joe] You don’t really see all the raw ingredients as they go into the binary. And as you say, the compilation process, the build systems, they may be grabbing other components and putting in libraries here and there. You actually do need a build time SBOM to have perfect visibility into a Software Bill of Materials if you truly wanna have a 100% precise Software Bill of Materials for your software.
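Editor’s note: one way to picture the gap Joe describes between a source-derived component list and what the build actually pulls in is a plain set comparison. The sketch below is in Python and assumes each SBOM has already been reduced to `(name, version)` pairs. The `sbom_gap` helper and every component listed are hypothetical, and real SBOM formats carry far more detail than this.

```python
def sbom_gap(source_sbom, build_sbom):
    """Compare two component lists given as (name, version) pairs.

    Returns the components seen only at build time and those declared
    only in the source manifest, each as a sorted list.
    """
    source = set(source_sbom)
    build = set(build_sbom)
    return {
        "only_in_build": sorted(build - source),
        "only_in_source": sorted(source - build),
    }


# Hypothetical data: what the source manifest declares...
declared_in_source = [
    ("app", "1.0"),
    ("json-parser", "2.3"),
]
# ...versus what an instrumented build recorded as actually linked in.
recorded_at_build = [
    ("app", "1.0"),
    ("json-parser", "2.3"),
    ("compression-lib", "1.2"),   # pulled in by the build system
    ("libc-static", "2.36"),      # statically linked by the toolchain
]

gap = sbom_gap(declared_in_source, recorded_at_build)
for name, version in gap["only_in_build"]:
    print(f"not visible from source alone: {name} {version}")
```

Anything that shows up under `only_in_build` is a component a source-only SBOM would have missed, which is the case for recording the SBOM while the build runs.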

[Paul] Just a quick note about something else that I believe is in the CRA, and that is that it won’t be allowed anymore to say to your customers, oh, well, if you want a security fix, you have to take all these feature advancements as well. So you can’t use a security fix as a sort of bait to lure people to take the latest and greatest version of your product if they don’t want to.

[Paul] If they have a version that you’ve said you’re going to support, you have to be able to support it with or without feature fixes. That is my understanding, which seems to be a very reasonable provision given that when you add new features to a product, you typically add a whole new set of bugs at the same time, and it’s very hard to avoid that.

[Joe] 100% right. And I would say, you know, I have seen over time a lot of product manufacturers who will use security as the reason to cause, say, an industrial partner to buy new products because they’re more secure. And we don’t want that in the CRA, and we don’t want to mix in other features that could cause other vulnerabilities.

[Joe] And having those separated makes a lot of sense. Ultimately, products will be phased out, but if they’re not in the phase out period, forcing someone to take on a new feature they don’t want does put too much pressure, too much power in the manufacturer’s hand, and that could lead to unintended consequences. So I think it’s a great thing to separate them and let those stand on their own merits. We have security updates to make sure you’re safe. We have product updates to make sure you’ve got the best features, and you can decide as the consumer when to apply those.

[Paul] So now if I may, Joe, I’ll try that $64,000 question. Even if you are entirely compliant, even if you have the best software development practices in the world, even if you have a complete bill of materials for everything so you know absolutely every speck of cumin that’s going into the recipe, you’re still likely to have bugs somewhere. Now suddenly, you have this greater liability. You have this greater business risk. How do you get around that?

[Joe] Well, I do think the answer to your question is in building the right processes to support software updates. And so should you want to deliver these products, you do need to commit to that. And I think handling the software updates is a key aspect, and building processes that allow you to administer updates easily and quickly is a good thing. But it also means you need to test software before you release it. And that applies to devices that are on persons, on cars, on infrastructure, scanners and other things, or sensors around an energy facility or what have you.

[Joe] These are vital ways to keep infrastructure operating. You do need to expect that folks will test that software, and we do need to expect that people have the processes to update that software sufficiently. And with that said, I do think taking a defense-in-depth approach and taking a risk management approach so that you have identified all the highest priority items and you’ve handled those and you have good software development life cycle practices, then you’re gonna be in a really strong spot to stay in compliance.

[Paul] So where do you think then something like cyber insurance will come in? Assuming that you’ve already done reasonably what you could, so you’re not taking the insurance as an excuse for doing things badly.

[Paul] Is that something that you think software companies will find useful and that can help them through any possible liability they might have that they didn’t have before?

[Joe] 100%. I think cyber insurance for products is a great idea. And I wanna give a historical example to make my point, if I may. There was, in the 1800s, a big problem with boilers exploding. If you installed the boiler incorrectly, boilers would explode.

[Joe] The company that figured out how to solve the problem offered insurance. The key to the insurance was having a really robust prescriptive way to say, if you’ve installed it exactly like this, we guarantee it won’t explode. And that was an engineering innovation solution, and that company cornered the market. I wish I had the details. Maybe we can include it in the description at some point or as a follow-up.

[Joe] With that said, the idea is that you can specify exactly what needs to happen in order to avoid a really grave consequence, and if you do, you will be insured. It’s almost like marrying an engineering process with cyber insurance. And if you figure out what steps everyone needs to do and say if you follow those steps, then we won’t have boilers explode, and we won’t have, you know, watches on your wrist catch on fire.

[Paul] Yes. It needn’t be a negative, need it?

[Paul] For example, in the United Kingdom, it’s compulsory to have insurance on any motorized vehicle that you use on a public road, and not having it is considered a serious offense. You find as you get to know people who’ve lived under this regulation for many years, that although the insurance is expensive and maybe they resent it a little bit, they don’t use it as an excuse to go out and drive badly. Hey, I’ve got the insurance. Now I can just let rip. In other words, it does seem to work for the greater good of all, and I don’t see why that shouldn’t work out in software engineering and related fields as well.

[Paul] Thank you, Joe. You’ve obviously considered this in great detail, not least because you run a company that not only makes software, but makes software that helps other people’s software be safer. So it’s fantastic to hear how much thought it is possible to put into this and how much you can get out of it. Thanks to everybody who tuned in and listened. That’s a wrap for this episode of Exploited: The Cyber Truth.

[Paul] If you found this podcast insightful, be sure to subscribe and share it with everyone else in your team. And remember folks, stay ahead of the threat. See you next time.