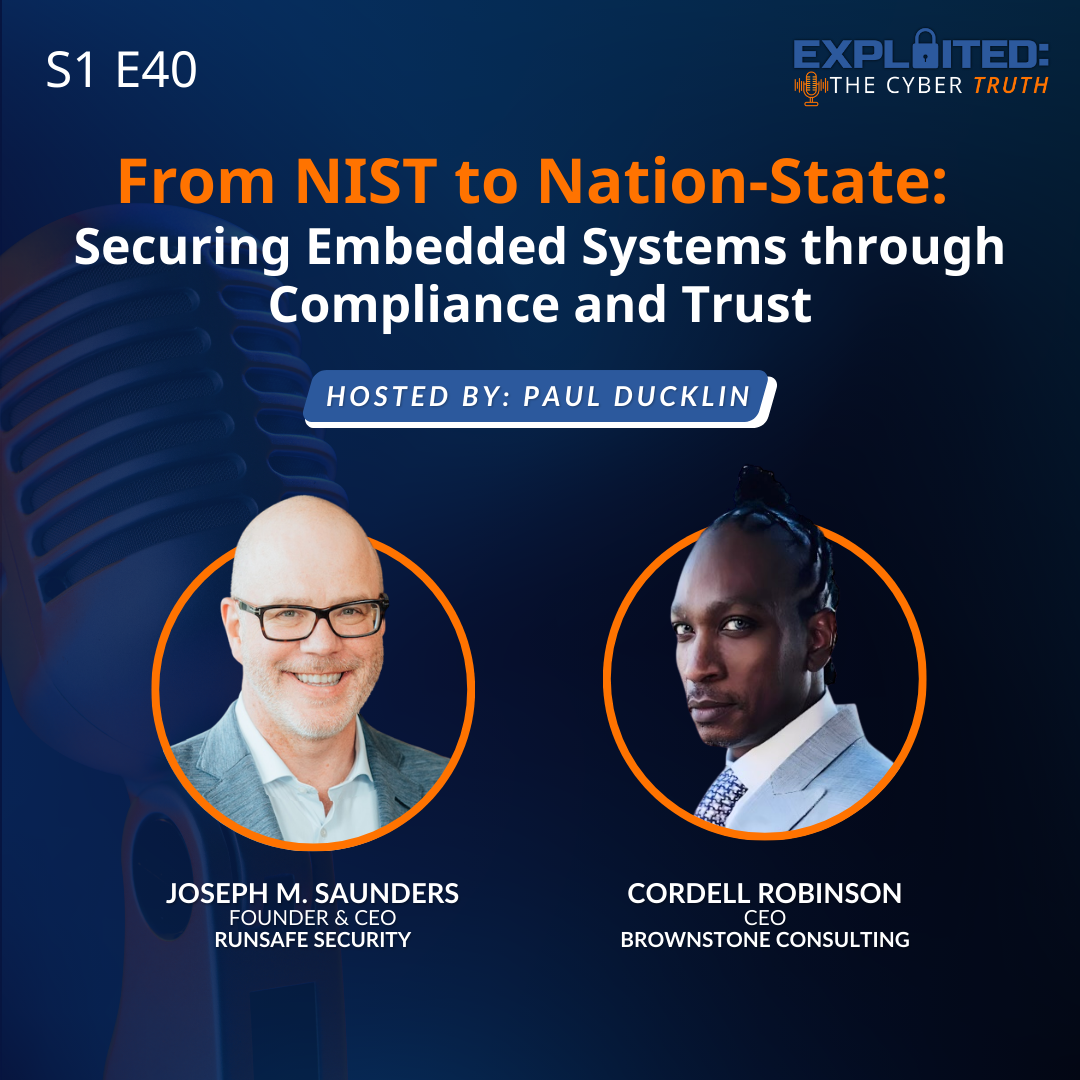

As nation-state cyber threats grow more strategic, the United States’ industrial control systems (ICS) and operational technology (OT) are facing mounting pressure. In this episode, Exploited: The Cyber Truth host Paul Ducklin is joined by RunSafe Security CEO Joe Saunders to explore the very real threat Iranian hackers pose to U.S. critical infrastructure.

Joe unpacks recent reports about Iranian-linked actors like CyberAv3ngers targeting human-machine interfaces (HMIs) and programmable logic controllers (PLCs) used in utilities, manufacturing, and healthcare. These attacks are disturbingly effective—not because they’re highly sophisticated, but because many devices are still running with default credentials and out-of-date software.

Listeners will learn:

- How attackers gain access to and manipulate ICS/OT systems

- What small and rural municipalities are up against when it comes to cyber defense

- Why secure development practices like software bills of materials (SBOMs) and runtime protections are critical

- How attackers use persistence as a weapon and how defenders can flip that script

Joe also introduces the concept of a National Cyber Guard, explores the role of public-private partnerships, and advocates for a culture of Secure by Design in critical infrastructure technology. This is a must-listen for cybersecurity professionals, OEMs, policymakers, and anyone invested in the resilience of America’s most vital systems.

Speakers:

Paul Ducklin: Paul Ducklin is a computer scientist who has been in cybersecurity since the early days of computer viruses, always at the pointy end, variously working as a specialist programmer, malware reverse-engineer, threat researcher, public speaker, and community educator.

His special skill is explaining even the most complex technical matters in plain English, blasting through the smoke-and-mirror hype that often surrounds cybersecurity topics, and helping all of us to raise the bar collectively against cyberattackers.

Joe Saunders: Joe Saunders is the founder and CEO of RunSafe Security, a pioneer in cyberhardening technology for embedded systems and industrial control systems, currently leading a team of former U.S. government cybersecurity specialists with deep knowledge of how attackers operate. With 25 years of experience in national security and cybersecurity, Joe aims to transform the field by challenging outdated assumptions and disrupting hacker economics. He has built and scaled technology for both private and public sector security needs. Joe has advised and supported multiple security companies, including Kaprica Security, Sovereign Intelligence, Distil Networks, and Analyze Corp. He founded Children’s Voice International, a non-profit aiding displaced, abandoned, and trafficked children.

Episode Transcript

Exploited: The Cyber Truth, a podcast by RunSafe Security.

[Paul] Welcome to Exploited: The Cyber Truth. I am Paul Ducklin. I’m joined by Joe Saunders, CEO and Founder of RunSafe Security. Hello, Joe. Welcome back.

[Joe] Hi there, Paul. Great to be back.

[Paul] An intriguing, interesting, important, and worrying topic all in equal measure for this episode, Joe, Iranian hackers and the threat to US critical infrastructure. Let me start at the beginning and ask you to give us an overview of the recent reports that have come from the US government about Iranian attackers targeting specifically industrial control systems and operational technology rather than just general hacking like, hey, let’s do some ransomware and keep the money because it’s foreign exchange. What are the key takeaways from all of that?

[Joe] Well, from an overview perspective, we have this cyber actor group called CyberAv3ngers, and that’s Av3ngers with the three in it, not an e.

[Paul] Let’s not confuse them with the television and film franchise of the same name.

[Joe] Yes.

[Joe] So these are Iranian-related cyber actors affiliated with the Islamic Revolutionary Guard Corps. The IRGC, of course, operates both internally and externally, and it has affiliated cyber actors doing its work. In this case, it appears the CyberAv3ngers, who have been operating for a few years now, have been targeting parts of US critical infrastructure, specifically our good friends the human-machine interface, otherwise known as the HMI, and the PLCs, the programmable logic controllers that help operate these OT systems inside critical infrastructure.

[Paul] So they’re not necessarily targeting the valve actuator itself. They’re targeting the little LCD control panel that may have been built twenty-three years ago with a few user-interface buttons on it that let you do things like open valve, close valve, increase flow rate, etcetera.

[Joe] That’s exactly what they’re doing in this particular case, or at least in these reports. There was a manufacturer of these kinds of devices, in this case Unitronics and its PLCs, and those devices, unfortunately, didn’t have the best security protections in the first place.

[Paul] That’s very diplomatically put, Joe.

[Joe] And we’ll get into it in more detail, I suppose. So the CyberAv3ngers, I guess, saw an opportunity.

[Joe] And, in fact, they work on behalf of the IRGC, certainly motivated by calling attention to the conflict Iran and Israel have had over the past couple of years. So the IRGC, working in conjunction with these cyber actors, is doing things to undermine the credibility of Israel in different ways. Targeting US infrastructure, and even UK infrastructure and other areas around the world, is part of what the CyberAv3ngers are doing.

[Paul] So part of this is kind of showmanship scary PR, but you have to assume that the other part of it is, hey, if we can find exploitable vulnerabilities, maybe there’s a time where we’ll want to actually press the button that actuates the valve at the wrong time.

[Joe] We’ve seen that in US critical infrastructure, where we find cyber bombs, if you will, implanted that could be detonated at a time of the bad actor’s choosing.

[Paul] And those could be at multiple levels, couldn’t they? It could be some flaw in the actuator itself, just thinking of valves. It could be a problem in the HMI that allows it to be triggered when it shouldn’t be, or it could be something in the IT part of the system where there’s some software that’s supposed simply to take stock of the settings in the network, but turns out to have an API bug that means it can actually be used to take control rather than just to read out data. So, Joe, do you want to say something about the particular methods that these threat actors have been using?

[Joe] Yeah.

[Joe] So in these particular cases, the CyberAv3ngers were leveraging factory-installed default passwords.

[Paul] Oh, dear.

[Joe] But then they figured out what else they could do, once they were on the device, to disrupt operations. They were modifying different files in the file system, rolling things back to previous versions, and disabling certain communication ports.

[Paul] So they could actually, if you like, reintroduce bugs that had been patched in the past?

[Joe] Reintroduce bugs and then lock out administrators from gaining access.

[Paul] Oh, so they’ve got root access because they’ve broken in, and then they very carefully closed the door behind them.

[Joe] Yep. They disabled things so you couldn’t upload or download anything, to prevent the operator from getting back in. There were lots of concerns in 2024 about which public utilities were at risk, and the local water companies, in this case, were the ones that were exposed.

[Joe] It creates a big problem when you have these smaller water systems, perhaps in rural areas, perhaps in major cities, perhaps in the suburbs and exurbs in between. What is the right level of response from the federal government and from law enforcement to help mitigate? What happened was affecting local systems that may not have been aware of the techniques being employed in the first place.

[Paul] Yes. It’s always a difficult thing to balance blame, isn’t it? Clearly, the attackers shouldn’t be doing this, and it’s both criminal and dangerous in equal measure. At the same time, you think, well, how much more could or should the people who build, sell, or procure these insecure devices be doing? You don’t want to blame the victim, but you kinda think maybe we need to build an environment where people are less likely to be attackable in the first place. So how do you go about dealing with that, particularly, as you say, in small towns and small municipalities?

[Paul] They don’t have much money at all. They’re not commercial enterprises that can go, hey, we’ll put the price of tomatoes up a bit, and we’ll take some of those profits and we’ll spend it on cybersecurity.

[Joe] Yeah. We all appreciate a well functioning society, especially when something goes wrong like this.

[Paul] Indeed.

[Joe] These are vital systems. These are public services that support the level of comfort we have in our lives today. For these organizations, I think the immediate options were to disconnect those devices from the Internet, update all the passwords on those PLCs and HMIs, and update their software to more current versions.

[Joe] You know, a lot of these systems don’t end up getting updated in a timely fashion. And so those were immediate steps, but then you ask yourself, okay. What else should have been done or could have been done? And unfortunately, when you ask that question after an incident like this, the general response is everything you can possibly imagine should be done. And you could probably list out 50 different things that the manufacturer and the distributor and the operator and the government should all be doing.

[Joe] And for me, it comes back to the basic fundamentals of those technology providers and those product manufacturers and what they ought to be doing in their devices in the first place. And of course, an obvious one there is not shipping devices with default passwords.

[Paul] Hallelujah. Yes. I can see why vendors do it.

[Paul] It means you get the device, there’s a little ticket in there that’s the same for everyone and says, log in as admin, password admin, and then set up the device following these steps. The problem is that if you neglect to do those steps, the device still works properly. And I think you should assume that every default password ever programmed into any device ever made is on a list that every cybercriminal and every state-sponsored actor already has in their pocket. If you think of it like that, it’s pretty obvious why you should not have working passwords that are the same for everybody.
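Paul’s point, that a device which still works with the shared factory password will eventually be attacked with it, suggests a simple vendor-side rule. The Python sketch below assumes a hypothetical device that refuses remote control until the shared default password has been replaced; `DeviceAuth`, its methods, and the 12-character minimum are illustrative assumptions, not any vendor’s actual design.

```python
import hashlib
import hmac
import secrets


class DeviceAuth:
    """Hypothetical sketch: no remote control until the factory password is replaced."""

    FACTORY_PASSWORD = "admin"  # identical on every unit, so assume attackers know it

    def __init__(self):
        self._salt = secrets.token_bytes(16)
        self._hash = self._digest(self.FACTORY_PASSWORD)
        self.provisioned = False  # becomes True only after a unique password is set

    def _digest(self, password):
        # Salted, slow hash so stored credentials are not trivially reversible.
        return hashlib.pbkdf2_hmac("sha256", password.encode(), self._salt, 100_000)

    def check(self, password):
        # Constant-time comparison of the derived hashes.
        return hmac.compare_digest(self._digest(password), self._hash)

    def set_password(self, old, new):
        if not self.check(old):
            raise PermissionError("wrong password")
        if new == self.FACTORY_PASSWORD or len(new) < 12:
            raise ValueError("choose a unique password of at least 12 characters")
        self._salt = secrets.token_bytes(16)  # fresh salt for the new password
        self._hash = self._digest(new)
        self.provisioned = True

    def allow_remote_control(self, password):
        # Unlike the devices discussed above, an unprovisioned unit does not
        # "still work" for remote control just because setup was skipped.
        return self.provisioned and self.check(password)
```

The design choice is to make the insecure state fail closed: skipping setup leaves the device unusable remotely rather than reachable by anyone holding the default list.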

[Joe] I was thinking about it. Did you know, Paul, in many cars, if you disconnect the battery, an anti-theft provision in the radio may kick in, and the radio may not work anymore. And guess what the answer is? You enter a default code that’s the same for all the radios produced for that same model.

[Paul] Let me guess. It starts with zero.

[Joe] Mine starts with a five. But it’s the same concept. Right? You know, you need a way to try to slow people down, but you have to make it accessible for support reasons and others.

[Paul] Exactly. We spoke about that in the podcast with Leslie Grandy, didn’t we? Where she said, you have to be really careful that you don’t knit a security straitjacket so restrictive that people go, I’m just gonna find my way around it. It’s too hard.

[Joe] And for me, that begins with those product manufacturers and the culture they set around quality, safety, and security, and, perhaps most importantly, around the overall software development process that enables those core pillars of good business for the companies that supply technology to critical infrastructure.

[Paul] So that’s SDLC. Right? Secure development life cycle. We don’t use that old school waterfall model where you do a whole load of development, then you do the testing. Oh, we found some bugs. Let’s stick some polyfiller in the cracks.

[Paul] Let’s sand it back nicely. Paint over it. She’ll be right. Because she probably won’t.

[Joe] And I do believe that if you don’t upgrade your SDLC, if you don’t bring it forward into a more security minded set of practices, at some point, that means that your devices are not secure, and that means there’s room for other products to disrupt your marketplace, to disrupt your customer base, and bring something that is more secure that may even have more features.

[Joe] I think organizations that have weak security practices face a competitive threat. I think it’s fundamental that these organizations embrace a more secure development life cycle and build security into their products, build in all these automated practices that I’ve talked about over time. In the end, it’s really looking out for your customers’ interests, because there are well-motivated nation-state actors, like the ones affiliated with the IRGC, who will find exposures, and they do look for the organizations and products that have weaknesses.

[Paul] So from a community, from a social, from a society point of view, you should want to do it. From a legal and ethical point of view, you ought to do it. But the flip side of that coin is that market forces may increasingly compel you to do it, because customers are showing that they prefer products that take security seriously. And the recent RunSafe healthcare survey of medical device acquirers showed that strongly, didn’t it? Was it almost 50% of the people you surveyed in the medical industry who said, we had products that we would have loved to buy because they’d be great for health care, but we said, no, we’re not buying them. Not good enough from a security point of view. So you can have the most fantastical surgical robot, but you might not be able to sell it if it’s not secure enough.

[Joe] And I think this is where programs like Secure by Design emanate from. The idea that you do need to build in security for the benefit of your customers. Let’s also then look at it from the operator’s perspective. They may have limited resources as we said.

[Joe] Some of these wastewater systems in smaller jurisdictions may serve 2,000 households or maybe 500 households. And they might have an IT person who’s looking after OT systems and security and internal systems and all the workstations in the enterprise.

[Paul] And helping the next municipality and the three unincorporated towns around.

[Joe] And it’s a big job for anybody. Right?

[Joe] And there’s a lot of responsibility. And so I do think then from that perspective, from the operator’s perspective, we’ve talked about the notion of Secure by Demand asking about the security practices from your suppliers, and that gets to the health care report that you talked about.

[Paul] And it’s not being difficult, is it? It’s not just getting the price down because I don’t think your security is good enough. It’s just saying, if you can bring me security, then I’m naturally going to be more likely to buy your product.

[Joe] Yeah. And as they say, it takes two to tango. So supplier and operator need to work in concert. Again, going back to the wastewater system example, so that’s the operator side and the product manufacturer side. And then there’s still this effect on the society, the town, the city, and all the citizens there.

[Joe] And so there is the extra question of what role the government ought to play. That’s actually an interesting question in this particular case, because legislation has since been passed to help the US government provide grants and assistance to wastewater systems and other local utilities to bolster or assess their systems, giving them access to resources they wouldn’t otherwise have. So that’s yet another response that has resulted from these events because of the nature of what was targeted.

[Paul] I guess some of these HMI systems that were targeted may be general purpose. They may not just be there to control valves or water supply or water drainage systems.

[Paul] They might be the same systems that could also be used to control a lathe, to control an automated cutting machine. And when you think about the name PLC, programmable logic controller? The whole idea is that you can reprogram them so that they can run your Christmas tree lights, but they could also run the street lights of a small town. So if the wrong person can reprogram it, game over.

[Joe] Yeah. Ultimately, the goal of digitizing these switches is to control and monitor things more efficiently. But the connectivity that resulted from all that is what exposes these digitally enabled controllers and switches to being targeted in the first place. And when you have a distributed system with lots of customers and support considerations, you end up finding examples where default passwords are put in play. In this case, that came at a serious cost. Part of the question is how we prevent the next attack, and part of it is what the wastewater system team does to respond to the existing one. And I still think there are other philosophical ways these things can be approached going forward.

[Joe] After these events, there was yet another consideration. As we went into a new administration, other ideas were bubbling up for ways to address these things, and one was to have some kind of incident response team that could be deployed quickly on behalf of the government to systems that do get compromised. The idea is that there will always be attacks on other systems, so how do we mobilize forces and get them out there quickly? Should those be volunteers? Should those be private companies? Or should we have people at the ready?

[Paul] So that’s like a concept of a first responder like you might have for bushfires or home fires or road traffic accidents.

[Joe] And I’m just speculating now; what I’m about to say doesn’t exist. But what if you had a National Cyber Guard?

[Joe] Or what if you had programs with the local universities? So you have students learning their cyber skills and cyber crafts and their computer science skills. Instead of volunteering for EMT, you’re volunteering for the cyber EMT.

[Paul] Yes.

[Joe] You know, it’s a great opportunity for students to build up skills, and it could provide a great service in certain areas.

[Paul] Well, if you think about medical degrees, in most countries one year of the degree is some kind of residency or internship. You’re learning, but your lectures actually happen on the ward while you’re following doctors and nurses around and being a kind of entry-level nurse and doctor at the same time. Maybe that’s a kind of apprenticeship model for the cybersecurity industry: you’re not just out there interning at some big commercial company in the hope of getting a job. You’re actually part of an emergency response team where you will learn to fight the good fight, sometimes in quite difficult circumstances.

[Joe] And the trick then, bringing it back to CyberAv3ngers.

[Joe] These are highly skilled, well trained cyber actors looking for systemic vulnerabilities where they can wreak some havoc. It may be that they are, in some cases, doing ransomware for purposes of raising money. In other ways, maybe they’re motivated by trying to undermine government in different ways. And so with the IRGC, the US is certainly a cyber target for trying to attack critical infrastructure. And that’s what we saw in these attacks with the CyberAv3ngers on wastewater systems.

[Paul] So, Joe, what are some simple, immediate steps that both vendors and consumers, municipalities, for example, could take to improve security in ICS or OT systems?

[Joe] One of my strongest recommendations is for these organizations to simply ask for a Software Bill of Materials that includes known vulnerabilities associated with the underlying software components.

[Paul] So that’s the recipe, the complete recipe that goes into the cake, including all the things that maybe you wish you hadn’t actually put in there when you baked it.

[Joe] Yeah. Those little morsels that could turn into some problem down the road.

[Paul] Three dead flies. Sorry about that.

[Joe] Three dead flies.

[Paul] I’m getting a bad feeling in my throat now.

[Paul] But that’s an important part of that whole process, isn’t it? Your attitude to vulnerability disclosure. What happens when something is found? That needs to go into what is effectively the bill of materials. Right.

[Paul] So that if somebody needs to chase down the dead flies, they know where to start looking.

[Joe] Yeah.

[Joe] So, ultimately, my point is that with those kinds of ingredients, with an understanding of those components and their vulnerabilities, you start to get your arms around the more significant problem areas you may want to focus on. And from there, I think it’s okay to start asking those vendors: What are your security protections, and what kind of security do you build in? And what are you doing to prevent attacks even when a patch is not available?
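Joe’s triage step, using an SBOM that lists known vulnerabilities to find the problem areas worth focusing on, can be sketched in a few lines of Python. The SBOM below is hypothetical and heavily trimmed, loosely following the field names of the CycloneDX JSON format (`components`, `bom-ref`, `vulnerabilities`, `ratings`, `affects`); the component names and `EXAMPLE-` vulnerability IDs are made up. The sketch ranks components by the worst severity of any known vulnerability that affects them.

```python
import json

# Lower number = more urgent; anything unrecognized is treated as "unknown".
SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3, "unknown": 4}

# Hypothetical, heavily trimmed SBOM loosely following CycloneDX JSON field names.
SBOM_JSON = """
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.5",
  "components": [
    {"bom-ref": "c1", "name": "web-hmi", "version": "2.3.0"},
    {"bom-ref": "c2", "name": "modbus-stack", "version": "0.9.1"},
    {"bom-ref": "c3", "name": "json-parser", "version": "4.0.2"}
  ],
  "vulnerabilities": [
    {"id": "EXAMPLE-0001", "ratings": [{"severity": "critical"}], "affects": [{"ref": "c2"}]},
    {"id": "EXAMPLE-0002", "ratings": [{"severity": "medium"}], "affects": [{"ref": "c1"}]},
    {"id": "EXAMPLE-0003", "ratings": [{"severity": "high"}], "affects": [{"ref": "c2"}]}
  ]
}
"""


def triage(sbom):
    """Return 'name version' for each affected component, most severe first."""
    names = {c["bom-ref"]: f'{c["name"]} {c["version"]}' for c in sbom["components"]}
    worst = {}  # bom-ref -> best (lowest) severity rank seen so far
    for vuln in sbom.get("vulnerabilities", []):
        severities = [r.get("severity", "unknown") for r in vuln.get("ratings", [])] or ["unknown"]
        rank = min(SEVERITY_ORDER.get(s, 4) for s in severities)
        for affected in vuln.get("affects", []):
            ref = affected["ref"]
            worst[ref] = min(worst.get(ref, 4), rank)
    return [names[ref] for ref, _ in sorted(worst.items(), key=lambda kv: kv[1])]


print(triage(json.loads(SBOM_JSON)))  # ['modbus-stack 0.9.1', 'web-hmi 2.3.0']
```

Components with no known vulnerabilities (here `json-parser`) drop out of the list; a real triage would also weigh factors such as network exposure and whether a fix exists.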

[Joe] How can you help me manage my OT network and my OT systems? I say start with the Software Bill of Materials, then work with your priority vendors where you see an opportunity to reduce risk, and then start asking them what kind of security they build in. Ask about their software development processes, their secure development life cycle methods, and their Secure by Design techniques. If you do that, all of a sudden, I think you have a really good feel for what else you may need to do in your own operations to fortify where there may be weaknesses. Ultimately, you want to help influence the security that your suppliers build into the systems they ship.

[Joe] If you can do that, then your systems will be protected, and society will be better off.

[Paul] So, Joe, that covers, if you like, the Secure by Demand side where the person who’s actually fronting up the money and the location for the devices says, show me what you’re doing. Now if you are a vendor and you’re asked for a Software Bill of Materials, if you can’t come up with that Software Bill of Materials, A, you probably should be able to, and B, if you’re going to sell into Europe, the Cyber Resilience Act that’s coming into force soon says, thou shalt jolly well learn how to come up with the Software Bill of Materials. What should your first steps be so that you can actually get that complete recipe? Because they’re not like a cake which might have 12 ingredients.

[Paul] There could be hundreds or even thousands of distinct components, mixed in at multiple stages of the supply chain. So where do you start on the Secure by Design side?

[Joe] So you can certainly analyze your source code. You can certainly analyze the binaries. But there is an optimal spot to generate a Software Bill of Materials, and that is as the software product is being produced. You could look at the recipe and say, oh, yeah, I can see where I might have some security vulnerabilities when I bake this cake. Or you could look at the finished cake and say, I see the icing, and I bet there’s something underneath it that looks really good. But you don’t really know until you cut it open and eat it, and that’s when you find those three flies in the cake.

[Joe] In the software development process, especially for the embedded systems that make up the technology in critical infrastructure, you essentially take source code, compile it, and produce a binary. During that compilation process, you take all the intended instructions and source code from the developer, and the compiler pulls in other components, dependencies, third-party libraries, and all that good stuff. That’s the moment to generate the Software Bill of Materials.

[Paul] Right.

[Joe] Because that’s where you have ground truth of what is actually ultimately going into that binary.
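To illustrate the “ground truth” point, here is a minimal Python sketch, assuming a build step that can hand its list of linker inputs to a hook. `build_time_sbom` is hypothetical, and its output only borrows CycloneDX field names; mapping each file back to an upstream component name and version, which a real build-time SBOM generator would do, is left out.

```python
import hashlib
from pathlib import Path


def build_time_sbom(link_inputs, product, version):
    """Hypothetical sketch: record exactly the files handed to the linker.

    link_inputs: paths of the object files and libraries this build actually used.
    """
    components = []
    for path in sorted(map(Path, link_inputs)):
        # Hash the bytes that really went into the binary, not what a manifest claims.
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        components.append({
            "type": "library" if path.suffix in {".a", ".so"} else "file",
            "name": path.name,
            "hashes": [{"alg": "SHA-256", "content": digest}],
        })
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "metadata": {"component": {"type": "firmware", "name": product, "version": version}},
        "components": components,
    }
```

Because the hook sees only what the build consumed, a library that is declared somewhere but never linked does not appear, and one pulled in transitively does; that is the advantage over reconstructing the list afterwards from source trees or finished binaries.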

[Paul] So you get a much richer, much more precise picture of what could go right and what could go wrong.

[Joe] And some people will argue, and I am one of them, that you should produce the Software Bill of Materials as close as possible to the moment you produce the software binary you’re going to ship to your customers. And the reason for all of this, since you asked what product manufacturers should be doing and what the supplier’s approach should be: there are a couple of items, but one of them is thinking about your customers’ needs. If you are shipping technology to all kinds of wastewater systems across, say, the United States, where I think there are 50,000 wastewater systems, you have to realize not all of them have the same level of security on their side, so you can do more on yours.

[Joe] And so I think it’s good customer service to start to really analyze what’s going in these software products so you can reduce downstream effects when a cyber actor tries to compromise the system.

[Paul] So, Joe, at the risk perhaps of seeming crassly commercial, perhaps you’d like to say something briefly about a couple of products and services that RunSafe does provide. One, building that bill of materials automatically, or helping you to do that, and the other, building your software in a way that makes it more resilient to exploitation even if you have inadvertently left vulnerabilities in it.

[Joe] At RunSafe, we took a philosophical approach to disrupt the economics associated with cyberattacks. A lot of these attack groups, like the CyberAv3ngers, are persistent.

[Joe] They might spend six months analyzing and developing their exploit and figuring out their attack methods and how to get on systems and things like that. And what we wanted to do was make it so frustrating that every time they go back and spend another six months on a target device, they get thwarted. And so that kind of approach means you’re disrupting that very economics. You’re using that persistence, which is maybe one of their strengths as one of their weaknesses.

[Paul] So in other words, they might spend three months getting an exploit to work against one instance of the valve actuator that they’ve bought and got in their lab, and then they go and try it on the other 999,999, and they realize, oh dear, we need to re-tailor the exploit.

[Paul] You haven’t disrupted the determinism because the devices will still perform correctly, but you’ve disrupted the ease with which they can find a one size fits all attack.

[Joe] Right. And so if you take the Unitronics example, if they had, say, security mechanisms built into their systems, one, they could save their own developers some time by preventing a whole bunch of vulnerabilities from being exploited even when a patch is not available. Two, they could be enhancing their customers’ operations as well, reducing downtime and ensuring that systems aren’t disrupted.

[Joe] With that in mind, that’s a philosophical approach to the technical thing that we did, which is insert security at build time. That security gets invoked on a device when it loads out in the field in that wastewater system for the benefit of runtime protection. And so it’s a very simple step for developers, for those product manufacturers, for those companies that ship the technology to their customers and critical infrastructure to enable security when they’re baking the cake, so to speak, from earlier.

[Paul] And it doesn’t require a super modern, super special compiler. It doesn’t require all sorts of extra compiler options that generate all sorts of extra code that could interfere particularly with real time devices that can’t tolerate additional delays and additional checks.

[Paul] The same code is running. It’s just running in a sufficiently different way that you would assume that its performance will be the same, but its exploitability will be sufficiently unpredictable that an entry to one will not be an entry to all.
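What Paul describes is a form of moving-target defense, and one simple variant is randomizing the order in which functions are laid out in memory on each device. The toy Python simulation below assumes a made-up 500-function firmware image, made-up sizes, and a made-up load address; it illustrates the general idea only and is not a description of how RunSafe’s product works. Each device gets the same function bodies in a different order, so an address hardcoded after lab work on one unit rarely points at the same function on another.

```python
import random

# Hypothetical firmware image: 500 functions with made-up sizes in bytes.
_sizes = random.Random(0)
FUNCTIONS = {f"fn_{i:03d}": _sizes.randrange(16, 4096, 4) for i in range(500)}
FUNCTIONS["open_valve"] = 0x80  # the routine an attacker wants to reach
BASE = 0x0800_0000              # hypothetical load address


def layout(device_seed):
    """Place the same functions in a per-device order; function bodies are unchanged."""
    order = sorted(FUNCTIONS)  # deterministic starting order
    random.Random(device_seed).shuffle(order)
    table, addr = {}, BASE
    for name in order:
        table[name] = addr
        addr += FUNCTIONS[name]
    return table


# The attacker perfects an exploit on one device bought for the lab...
lab = layout(device_seed=1)
hardcoded = lab["open_valve"]

# ...then tries the same hardcoded address across a fleet of 1,000 other units.
fleet = [layout(device_seed=s) for s in range(2, 1002)]
hits = sum(dev["open_valve"] == hardcoded for dev in fleet)
print(f"hardcoded address valid on {hits} of {len(fleet)} devices")
```

The limits of the sketch are worth stating: an attacker who can leak an address from a particular device may still recover that device’s layout. The point is narrower, that months of lab work on one unit no longer transfer automatically to every unit in the fleet.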

[Joe] And that is an asymmetric shift in cyber defense on the economic side and on a technological side. And so that same point where you can add in the security happens to be that exact same point where you can build with RunSafe a Software Bill of Materials that is complete and correct and accurate.

[Paul] Because it’s looking at what’s going into the cake, it’s not sampling the cake and trying to guess from all the chemical reactions that happened during the baking what was there beforehand.

[Joe] Yep. All we wanna do is sprinkle a little pixie dust as you’re producing that cake so that that cake is resilient down the road. And maybe that’s not quite the right analogy. But with that said, we wanna make it so simple that you can add security into your technology systems so they are resilient when they’re out in the field.

[Paul] So it should be fast, efficient, and safe. Pick all three of three.

[Joe] Yeah. Take all three of three, because we know projects are managed around budget, schedule, and scope. And so if you can avoid disrupting the budget, the scope, and the timelines themselves, and still have a more robust, resilient system from a security perspective, then everybody wins, including your customers.

[Paul] Absolutely. Joe, I think that’s a great spot at which to finish. The idea that this really is something that affects all of us and that actually all of us can do, even if it’s only a little bit to help, all of us can actually make a difference, whether we’re a consumer, whether we’re a user, whether a supplier, a vendor, or whatever.

[Paul] So that’s a wrap for this episode of Exploited: The Cyber Truth. Thanks to everybody who tuned in and listened. If you enjoy this podcast, please don’t forget to subscribe, so you know when each new episode drops.

[Paul] Please like, share, and promote us on social media. Please be sure to recommend us to everyone in your team. Don’t forget folks, stay ahead of the threat. See you next time.